blog

Managing Database Backup Retention Schedules

Attention: Skip reading this blog post if you can afford unlimited storage space.

If you could afford unlimited storage space, you wouldn’t have to worry about backup retention at all, since you could store your backups infinitely without any restriction, provided your storage space provider can assure the data won’t be missing. Database backup retention is commonly overlooked because it doesn’t seem important at first, and only comes into sheer attention when you have stumbled upon resource limit or hit a bottleneck.

In this blog post, we are going to look into database backup retention management and scheduling and how we can manage them efficiently with ClusterControl.

Database Backup Retention Policy

Database backup retention policy refers to how long the database backups are kept within our possession. Some examples would be:

- daily backups for big databases are kept for one week using local storage,

- weekly backups for small databases are kept for eight weeks on disk storage (both local and remote),

- monthly backups for all databases are kept for 3 months on cloud storage,

- no backups are saved beyond 3 months.

The main advantage of having a database backup retention policy is to make sure we efficiently manage our storage resources, without impacting our database recovery process if something goes wrong. You don’t want to get caught up when an urgent recovery is needed and the necessary backup file is no longer there to help us perform restoration because we had got it deleted to clear some space up.

To build a good backup retention policy, we need to consider the two most important aspects:

- Backup storage size.

- Database backup size.

Backup Storage Size

The first priority is to ensure that we have enough space to store our backups as a starter. A simple rule of thumb is the storage space must at least have the same size as the data directory size for the database server. Generally, the bigger the storage size, the bigger the cost is. If you can opt for a bigger storage space, you can keep older backups longer. This aspect hugely influences your retention policy in terms of the number of backups that you can store.

Storing the backups off-site, in the cloud, can be a good way to secure your backups against disaster. It comes with a higher price per GB ratio but it’s still affordable considering the advantages that you will get from it. Most of the cloud storage providers now offer a secure, scalable, highly available with decent IO performance. Either way, ClusterControl supports storing your backup in the local storage, remote storage or in the cloud.

Database Backup Size

The size of backups are directly affected by the following factors:

- Backup tools – Physical backup is commonly bigger than logical backup.

- Backup method – Incremental and partial backups are smaller than a full backup.

- Compression ratio – Higher compression level produces smaller backup, with a tradeoff of processing power.

Mix and match these 3 factors will allow you to have a suitable backup size to fit into your backup storage and restoration policy. If storing a full backup is considered too big and costly, we can combine incremental backups with a full backup to have a backup for one particular set. Incremental backups commonly stores the delta between two points, and usually only takes a relatively small amount of disk space if compared to a full backup. Or, you can opt for a full partial backup, just backs up chosen databases or tables that can potentially impact the business operation.

If a full physical backup with a compression ratio of 50%, producing 100M of backup size, you could increase the compression ratio to 100% in order to reduce the disk space usage but with a slower backup creation time. Just make sure that you are complying with your database recovery policy when deciding which backup tools, method and compression ratio to use.

Managing Retention Schedules Using ClusterControl

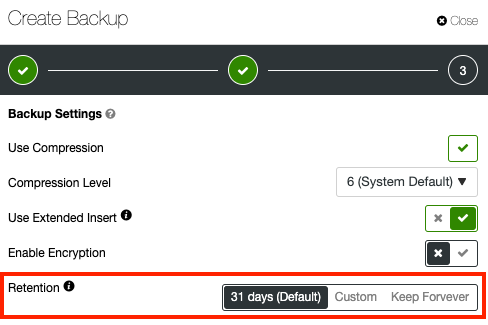

ClusterControl sophisticated backup management features includes the retention management for all database backup methods when creating or scheduling a backup:

The default value is 31 days, which means the backup will be kept in possession for 31 days, and will be automatically deleted on the 32nd day after it was successfully created. The default retention value (in day) can be changed under Backup Settings. One can customize this value for every backup schedule or on-demand backup creation job to any number of days or keep it forever. ClusterControl also supports retention for backup that is stored in the supported cloud platforms (AWS S3, Google Cloud Storage and Azure Blob Storage).

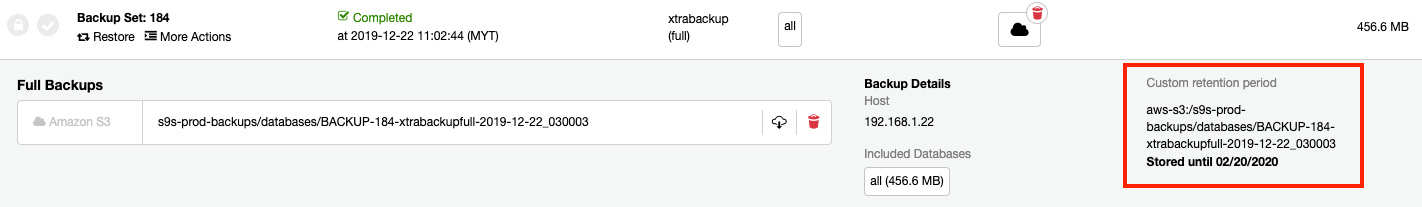

When a backup is successfully created, you will see the retention period in the backup list, as highlighted in the following screenshot:

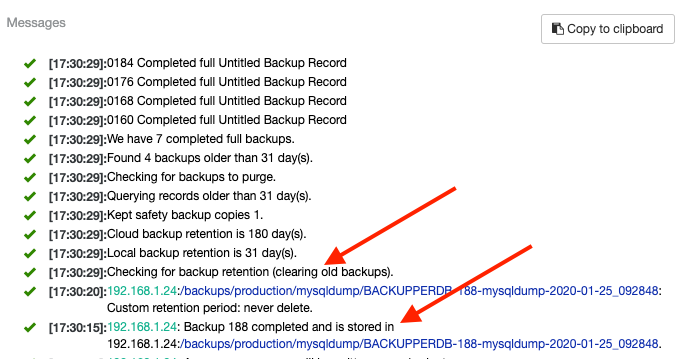

For backup purging process, ClusterControl triggers backup purge thread every single time after any backup process for that particular cluster is completed. The purge backup thread looks for all “expired” backups and performs the necessary deletion process automatically. The purging interval sounds a bit excessive in some environments but this is the best purging scheduling configuration that we have figured out for most configurations thus far. To understand this easily, consider the following backup retention setting for a cluster:

- One creates a weekly backup, with a retention period of 14 days.

- One creates an hourly backup, with a retention period of 7 days.

- One creates a monthly backup, without a retention period (keep forever).

For the above configuration, ClusterControl will initiate a backup purge thread for (a) and (b) every hour because of (b), although the retention period for (a) is 14 days. Created backups that have been marked as “Keep Forever” (c) will be skipped by the purge thread. This configuration protects ClusterControl from excessive purging if the job is scheduled daily. Thus, don’t be surprised if you see the following lines in job messages after any of the backup job is completed:

Advanced Retention Management with ClusterControl CLI

ClusterControl CLI a.k.a s9s, can be used to perform advanced retention management operations like deleting old backup files while keeping a number of copies exist for safety purpose. This can be very useful when you need to clear up some space and have no idea which backups that will be purged by ClusterControl, and you want to make sure that a number of copies of the backup must exist regardless of its expiration as a precaution. We can easily achieve this with the following command:

$ s9s backup

--delete-old

--cluster-id=4

--backup-retention=60

--cloud-retention=180

--safety-copies=3

--log

Deleting old backups.

Local backup retention is 60 day(s).

Cloud backup retention is 180 day(s).

Kept safety backup copies 3.

Querying records older than 60 day(s).

Checking for backups to purge.

No old backup records found, nothing to delete.

Checking for old backups is finished.The above job will force ClusterControl to look for local backups that have been created which are older than 60 days and backups that are stored in the cloud which are older than 180 days. If ClusterControl finds something that matches this query, ClusterControl will make sure only the 4th copy and older will be deleted, regardless of the retention period.

The –backup-retention and –cloud-retention parameters accept a number of values:

- A positive number value can control how long (in days) the taken backups will be preserved.

- -1 has a very special meaning, it means the backup will be kept forever.

- 0 is the default, it means prefer the global setting which can be configured from the UI.

Apart from the above, the standard backup creation job can be triggered directly from the command line. The following command create a mysqldump backup for cluster ID 4 on node 192.168.1.24, where we will keep the backup forever:

$ s9s backup --create

--backup-method=mysqldump

--cluster-id=4

--nodes=192.168.1.24:3306

--backup-retention=-1

--log

192.168.1.24:3306: Preparing for backup - host state (MYSQL_OK) is acceptable.

192.168.1.24:3306: Verifying connectivity and credentials.

Checking backup creation job.

192.168.1.24:3306: Timezone of backup host is UTC.

Backup title is ''.

Backup host is 192.168.1.24:3306.

Backup directory is /backups/production/mysqldump/.

Backup method is mysqldump.

PITR compatible no.

Backup record created.

Backup record saved.

192.168.1.24: Creating backup dir '/backups/production/mysqldump/BACKUPPERDB-190-mysqldump-2020-01-25_093526'.

Using gzip to compress archive.

192.168.1.24:3306: detected version 5.7.28-31-log.

Extra-arguments be passed to mysqldump: --set-gtid-purged=OFF

Backup (mysqldump, storage node): '192.168.1.24: /usr/bin/mysqldump --defaults-file=/etc/my.cnf --flush-privileges --hex-blob --opt --master-data=2 --single-transaction --skip-lock-tables --triggers --routines --events --set-gtid-purged=OFF --databases mysql backupninja backupninja_doc proxydemo severalnines_prod severalnines_service --ignore-table='mysql.innodb_index_stats' --ignore-table='mysql.innodb_table_stats' |gzip -c > /backups/production/mysqldump/BACKUPPERDB-190-mysqldump-2020-01-25_093526/mysqldump_2020-01-25_093546_dbdumpfile.sql.gz'.

192.168.1.24: MySQL >= 5.7.6 detected, enabling 'show_compatibility_56'

192.168.1.24: A progress message will be written every 1 minutes

192.168.1.24: Backup 190 completed and is stored in 192.168.1.24:/backups/production/mysqldump/BACKUPPERDB-190-mysqldump-2020-01-25_093526.

192.168.1.24:/backups/production/mysqldump/BACKUPPERDB-190-mysqldump-2020-01-25_093526: Custom retention period: never delete.

Checking for backup retention (clearing old backups).

Local backup retention is 31 day(s).

Cloud backup retention is 180 day(s).

Kept safety backup copies 1.

Querying records older than 31 day(s).

Checking for backups to purge.

Found 4 backups older than 31 day(s).

We have 9 completed full backups.For more explanation and examples, check out the s9s backup guide.

Conclusion

ClusterControl backup retention management allows you to manage your backup storage space efficiently, without compromising your database recovery policy.